GenAI feels like another turning point for software development. It is really just the latest moment in a long, repeating pattern: each era partially reveals how creating software needs to be approached, and the industry broadly avoids acting on it.

Early limits of software development

The “software crisis” of the 1960s was the first time this pattern became apparent. There was a sudden leap in computing power, huge optimism, and a belief that software development would simply scale to match it. It didn’t. Projects ran late and over budget, systems failed in production, and codebases became unmaintainable.

Systems had become too complex for the informal development practices of the time. The response was software engineering as a discipline: the industry recognised that writing software was not just typing instructions but coordinating human understanding. The answer was more structure, more process, more formality. The underlying assumption, though, was that software development could be treated like other branches of engineering: plan carefully, specify up front, then execute.

When big up-front planning began to struggle to keep up

By the 1990s that model was starting to crack. Several things had shifted. Programming languages and tooling had improved. IDEs, compilers and version control reduced friction. Hardware and compute continued to become cheaper and more powerful. But most importantly, the internet removed distribution friction. You no longer had to ship physical media or install systems on site. Software changes could reach users almost instantly. The cost of change dropped sharply.

Agile emerged in that gap. Not because people suddenly liked stand-ups and sticky notes, but because fast feedback through working software was proving to be more effective than detailed up-front plans.

The problem was that the underlying assumption, that software was a construction problem, never really went away. Most organisations copied the rituals and missed the point. Agile in practice largely became process rather than philosophy.

Software development in the GenAI era

And now GenAI-assisted coding. GenAI has emerged from the continued trend of compute becoming more powerful and cheaper, combined with the sheer volume of data now available as a result of the internet era. The underlying concepts of machine learning have existed for decades, but only recently have the economics and data volumes made it practical.

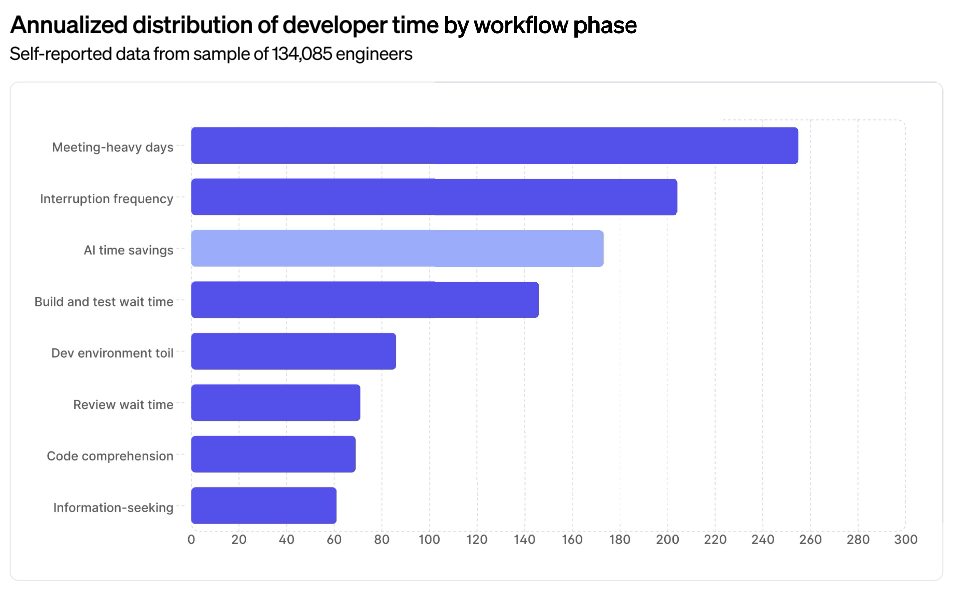

As a result, the distance from intent to working artefact has shrunk again. You can explore ideas, generate alternatives, test assumptions and see consequences in hours rather than days.

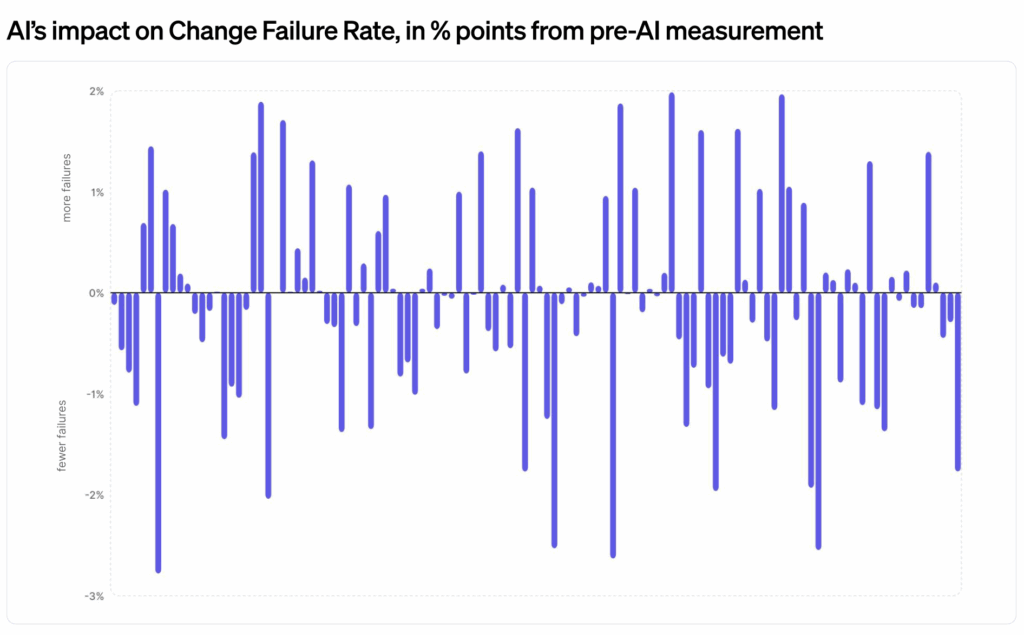

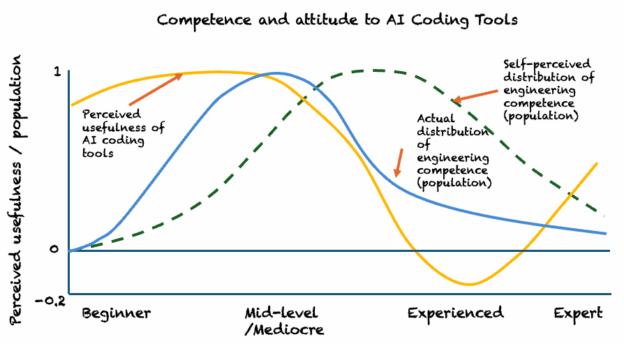

And yet, software engineering is still, to this day, largely treated as a construction problem. Most teams operate as feature factories, fed tickets from a backlog, optimising for “velocity” rather than for fast feedback and the ability to adapt quickly to new information. Work is broken into tasks, handed off between roles, and judged by output rather than learning.

What building software is really about

At its core, software exists to solve one problem: managing the flow of information. Information is not static. It is provisional, contextual, and constantly changing. Every use of a system creates new information that should influence what happens next. Many of a system’s most important inputs only appear once it is in use.

Software is therefore less a construction problem and more an ongoing conversation between users, systems and decisions. Its quality lies in the strength of its feedback loops and how effectively it enables learning and adjustment as conditions change.

Technology advances keep reducing the cost of building software, but organisations keep failing to adapt how they approach it. Each era exposes that mismatch more visibly, without resolving it.

The organisations that will thrive in this era will be the ones that optimise for learning over output, feedback over plans, and understanding over speed.

Hopefully this time it will be different.